Agenda¶

- Getting Started

- Jupyter Notebook

- Pandas imports

- Pandas dataframes

- Pandas data cleaning and preprocessing

- Pandas descriptive statistics

- Data plotting

- Full example

Mix of theory and hands-on practise

Please go to http://tiny.cc/4c33gz

Getting started - section 1¶

Expectations: "Introduction to Python programming" or similar basic python experience.

Installing software¶

- python - https://www.python.org/downloads/

- anaconda: includes python and all the data-science libraries used in this workshop (numpy, pandas, matplotlib, seaborn) - https://www.anaconda.com/distribution

- pycharm: IDE for python development - https://www.jetbrains.com/pycharm/download

Pycharm¶

- Integrated Development Environment for python

- Simplifies writing and running python code

- Available for Windows, Mac and Linux

- Available in Professional, Community and Educational edition. Community (free) editon has everything you need.

Pycharm¶

Pycharm¶

But not today...¶

Will use online version of jupyter notebook, so no local installation required.

Jupyter notebook foundamental - Section 2¶

What's a jupyter notebook?¶

"jupyter" + "notebook"

- "jupyter": "acronym" from Julia + python + R (though many more languages are now supported)

- "notebook": collection of text, code, plots for an analysis (literate programming)

Jupyter project: https://jupyter.org/index.html

Gallery of interesting jupyter notebooks: https://github.com/jupyter/jupyter/wiki/A-gallery-of-interesting-Jupyter-Notebooks

Anatomy of a jupyter notebook¶

- Notebook consists of a series of "cells"

- Each cell can have text (markdown) or code (in our case, python).

- A cell can be run by selecting it and pressing

button at the top (or pressing ctr-enter)

button at the top (or pressing ctr-enter) - When the cell is run, the output is shown underneath:

- Simple expressions

2+2

4

- Comments

# Lines that start with a # are comments.

- Store data in variables

a = 2 + 2

b = 1 + 1

a + b

6

- Cells can reference variables from prevoius cells (make sure to run the previous cells first!):

a - b

2

Where can I find this material?¶

- Presentation and code materials at https://bitbucket.org/manuela_s/python_workshop/

- Binder version at http://tiny.cc/4c33gz

- Any question, comment, come chat to me or email me at manuelasalvucci@rcsi.ie

Practise¶

- Go to http://tiny.cc/4c33gz

- Click on "02_jupyter_notebook_practise.py"

- Play around

Continue in 5 min.

Pandas import - section 3¶

Pandas library¶

pandas is a Python package providing fast, flexible, and expressive data structures designed to make working with structured (tabular, multidimensional, potentially heterogeneous) and time series data both easy and intuitive**. It aims to be the fundamental high-level building block for doing practical, real world data analysis in Python. Additionally, it has the broader goal of becoming the most powerful and flexible open source data analysis / manipulation tool available in any language. It is already well on its way toward this goal. (From https://pypi.org/project/pandas/)

"the name is derived from the term “panel data”, an econometrics term for multidimensional structured data sets." (Wikipedia)

Pandas library¶

pandas is well suited for many different kinds of data:

- Tabular data with heterogeneously-typed columns, as in an SQL table or Excel spreadsheet

- Ordered and unordered (not necessarily fixed-frequency) time series data.

- Arbitrary matrix data (homogeneously typed or heterogeneous) with row and column labels

Any other form of observational / statistical data sets. The data actually need not be labeled at all to be placed into a pandas data structure

The two primary data structures of pandas, Series (1-dimensional) and DataFrame (2-dimensional), handle the vast majority of typical use cases in finance, statistics, social science, and many areas of engineering. For R users, DataFrame provides everything that R’s data.frame provides and much more. From https://pypi.org/project/pandas/

Example of data import with pandas¶

import os

import pandas

input_filename = os.path.join('data', 'countries.xls')

countries = pandas.read_excel(input_filename, index_col='Country')

countries

| Population | Area | Capital | |

|---|---|---|---|

| Country | |||

| Ireland | 4784000 | 84421 | Dublin |

| Italy | 60590000 | 301338 | Rome |

| Germany | 82790000 | 357386 | Berlin |

Step by step¶

Importing libraries¶

import os

import pandas

- Built-in libraries (like "os") is already installed and can just be imported. List of built in modules: https://docs.python.org/3/py-modindex.html

- External libraries (like pandas) need to be installed first. Can be installed from the python package index (https://pypi.org/) with the pip command. If you installed python with anaconda (https://www.anaconda.com/), pandas and other data-science packages are aleady installed.

- Makes library available in the "pandas" namespace. Functions from the pandas library can be called as pandas.(function_name), for example

pandas.read_excel() - Alternative import forms:

from pandas import read_excelandread_excel()from pandas import *andread_excel()import pandas as pdandpd.read_excel()

Importing data¶

read_excel¶

pandas.read_excel(io, sheet_name=0, header=0, names=None, index_col=None, usecols=None, squeeze=False, dtype=None, engine=None, converters=None, true_values=None, false_values=None, skiprows=None, nrows=None, na_values=None, keep_default_na=True, verbose=False, parse_dates=False, date_parser=None, thousands=None, comment=None, skip_footer=0, skipfooter=0, convert_float=True, mangle_dupe_cols=True, **kwds)

- full documentation at https://pandas.pydata.org/pandas-docs/stable/reference/api/pandas.read_excel.html

Filename (aka io)¶

input_filename = os.path.join('data', 'countries.xls')

input_filename

'data/countries.xls'

Basic:

countries.xls- file in the current directory

Sub-directories:

data/countries.xls- file in thedatasub-directory (linux/mac)data\countries.xls- file in thedatasub-directory (windows)os.path.join('data', 'countries.xls')- file in thedatasub-directory (any os)

Can also import "directly" from a URL:

Setting index/indices¶

Basic:

index_col=0orindex_col='Country'

Can set multiple indices:

- for example

index_col=[0,3]orindex_col=['Country', 'Capital']

No necessary to set an index, however setting index/indices allows for:

- more performant and simpler look-ups, for example

countries.loc['Ireland'] - easier joins between dataframes (will use index/indices by default if what to join on is not specified)

- separation between metadata (index/indices) and data (regular columns) which makes data manipulation easier (

countries.stack(),countries.describe', ...)

Rule of thumbs: set metadata as index

Other pandas imports¶

read_csv: read from flat file (use separator ',' (default), ';' and '\t' to read different text files)read_sql: import data from SQL databaseread_spps: import from SPPS fileread_stata: import from stataread_pickle: read from python object serialization files.- ...many more

See full documentation at https://pandas.pydata.org/pandas-docs/stable/reference/io.html

Import from MATLAB and R¶

- No native support from pandas

- Can be imported with 3rd party packages:

- MATLAB .mat files: https://scipy-cookbook.readthedocs.io/items/Reading_mat_files.html

- R .rds or .Rdata: https://github.com/ofajardo/pyreadr

Building a dataframe directly in pandas¶

Make a dictionary with the data to include in the dataframe

data = {

'Country': ['Ireland', 'Italy', 'Germany'],

'Population': [4784000, 60590000, 82790000],

'Area': [84421, 301338, 357386],

'Capital': ['Dublin', 'Rome', 'Berlin'],

}

Add the data into the dataframe

countries = pandas.DataFrame(data)

countries

| Country | Population | Area | Capital | |

|---|---|---|---|---|

| 0 | Ireland | 4784000 | 84421 | Dublin |

| 1 | Italy | 60590000 | 301338 | Rome |

| 2 | Germany | 82790000 | 357386 | Berlin |

Building a dataframe directly in pandas¶

Set indices (optional).

Note inplace=True (alternatively countries = countries.set_index(['Country', 'Capital']))

countries.set_index(['Country', 'Capital'], inplace=True)

countries

| Population | Area | ||

|---|---|---|---|

| Country | Capital | ||

| Ireland | Dublin | 4784000 | 84421 |

| Italy | Rome | 60590000 | 301338 |

| Germany | Berlin | 82790000 | 357386 |

Pandas export¶

countries.to_excel(filename, ...)countries.to_csv(filename, ...)countries.to_sql(filename, ...)countries.to_stata(filename, ...)countries.to_pickle(filename, ...)

Alternatives to pandas for heavy datasets¶

- Almost drop-in pandas replacement:

- dask: https://dask.org/

- datatable: https://github.com/h2oai/datatable

Practise¶

- Go to http://tiny.cc/4c33gz

- Click on "03_pandas_import_practise.py"

- Play around

Continue in 10 min.

Pandas dataframe foundamentals - section 4¶

The iris dataset¶

- The iris dataset (https://en.wikipedia.org/wiki/Iris_flower_data_set) was first described by Fisher in 1936

- It includes measurements for 4 flower characteristics (length and width for petals and sepals) for 150 flowers from 3 different iris species

The iris dataset¶

Let's explore the iris dataset¶

To load the data, we do:

import os

import pandas

iris = pandas.read_csv(os.path.join('data', 'iris.csv'))

Let's explore the iris dataset¶

The dataframe has columns with column headers

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5 | 3.6 | 1.4 | 0.2 | setosa |

Use iris.columns to get the column names (returns a pandas Index object)

iris.columns

Index(['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)',

'petal width (cm)', 'species'],

dtype='object')

Let's explore the iris dataset¶

Each column represents 1 variable (i.e. 1 type of attribute/characteristic/feature)

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5 | 3.6 | 1.4 | 0.2 | setosa |

Select a single column by doing iris.[column_name] or iris.column_name (if no special characters). returns a pandas series object.

iris['sepal length (cm)']

0 5.1

1 4.9

2 4.7

3 4.6

4 5.0

...

145 6.7

146 6.3

147 6.5

148 6.2

149 5.9

Name: sepal length (cm), Length: 150, dtype: float64

Let's explore the iris dataset¶

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5 | 3.6 | 1.4 | 0.2 | setosa |

Use iris.index to get the index of the table. The index is like a special column that can be used for easy lookups. One of the data-columns can be set to be the index, or by default a simple range-index is used.

iris.index

RangeIndex(start=0, stop=150, step=1)

Let's explore the iris dataset¶

Each row represents 1 observation (i.e. 1 sample/flower)

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5 | 3.6 | 1.4 | 0.2 | setosa |

We can select rows based on the row-number (iris.iloc[row_number]) or the index (iris.loc[index_value]).

In this case the index value is the same as the row_number (zero-indexed). Returns a pandas series object.

iris.iloc[0]

sepal length (cm) 5.1 sepal width (cm) 3.5 petal length (cm) 1.4 petal width (cm) 0.2 species setosa Name: 0, dtype: object

Let's explore the iris dataset¶

What is the size of the dataset? rows x columns

iris.shape

(150, 5)

Let's explore the iris dataset¶

Use iris.head() to show first few rows (default n=5)

iris.head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

iris.head(n=3)

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

Let's explore the iris dataset¶

Use iris.tail() to show last few rows (default n=5)

iris.tail()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 145 | 6.7 | 3.0 | 5.2 | 2.3 | virginica |

| 146 | 6.3 | 2.5 | 5.0 | 1.9 | virginica |

| 147 | 6.5 | 3.0 | 5.2 | 2.0 | virginica |

| 148 | 6.2 | 3.4 | 5.4 | 2.3 | virginica |

| 149 | 5.9 | 3.0 | 5.1 | 1.8 | virginica |

iris.tail(n=3)

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 147 | 6.5 | 3.0 | 5.2 | 2.0 | virginica |

| 148 | 6.2 | 3.4 | 5.4 | 2.3 | virginica |

| 149 | 5.9 | 3.0 | 5.1 | 1.8 | virginica |

Let's explore the iris dataset¶

Use iris.sample() to show a random subset of rows (default n=1)

iris.sample(n=5)

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 106 | 4.9 | 2.5 | 4.5 | 1.7 | virginica |

| 82 | 5.8 | 2.7 | 3.9 | 1.2 | versicolor |

| 124 | 6.7 | 3.3 | 5.7 | 2.1 | virginica |

| 101 | 5.8 | 2.7 | 5.1 | 1.9 | virginica |

| 122 | 7.7 | 2.8 | 6.7 | 2.0 | virginica |

Specify axis='columns' to show a random subset of columns (deafult n=1)

iris.sample(n=2).sample(n=3, axis='columns')

| petal length (cm) | petal width (cm) | sepal width (cm) | |

|---|---|---|---|

| 85 | 4.5 | 1.6 | 3.4 |

| 122 | 6.7 | 2.0 | 2.8 |

Let's explore the iris dataset¶

iris.dtypes to show data type of each column

iris.dtypes

sepal length (cm) float64 sepal width (cm) float64 petal length (cm) float64 petal width (cm) float64 species object dtype: object

Let's explore the iris dataset¶

Use iris.info() to get an overview of the dataframe

iris.info()

<class 'pandas.core.frame.DataFrame'> RangeIndex: 150 entries, 0 to 149 Data columns (total 5 columns): sepal length (cm) 150 non-null float64 sepal width (cm) 150 non-null float64 petal length (cm) 150 non-null float64 petal width (cm) 150 non-null float64 species 150 non-null object dtypes: float64(4), object(1) memory usage: 6.0+ KB

Practise¶

- Go to http://tiny.cc/4c33gz

- Click on "04_pandas_dataframe_practise.py"

- Play around

Continue in 15 min.

Data cleaning and preprocessing with pandas - section 5¶

Data cleaning and preprocessing with pandas¶

To load the data, we do:

import os

import numpy

import pandas

iris = pandas.read_csv(os.path.join('data', 'iris.csv'))

Data cleaning and preprocessing¶

The iris dataset is a "clean" dataset, but real-world datasets never are...

Common data cleaning includes:

- recode missing data

- harmonize entries (spelling, etc.)

- dropping columns (redundant, not informative, etc.)

- drop rows (duplicates, missing data, etc.)

- map original values to values required for downstream analysis (binarize, convert units, etc.)

- synthetizing features (features engineering)

- normalization/scaling features

Let's make the iris dataset a bit messy...¶

# Change the spelling of the species on some of the rows

to_replace = iris.species.sample(frac=0.2, random_state=2)

iris.loc[to_replace.index, 'species'] = to_replace.str.upper()

# Replace some of the values with NaN (missing value)

iris.where(numpy.random.RandomState(seed=0).random(iris.shape) > 0.05, numpy.nan, inplace=True)

iris.head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | SETOSA |

| 3 | 4.6 | NaN | 1.5 | 0.2 | SETOSA |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

Missing data¶

Use iris.isna() to visualise missing data (True if missing)

iris.isna().head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | False | False | False | False | False |

| 1 | False | False | False | False | False |

| 2 | False | False | False | False | False |

| 3 | False | True | False | False | False |

| 4 | False | False | False | False | False |

Use iris.isna().sum() to count missing data along each column

iris.isna().sum()

sepal length (cm) 6 sepal width (cm) 7 petal length (cm) 9 petal width (cm) 6 species 9 dtype: int64

Missing data¶

Use iris.isna().sum(axis=1) to count missing data along each row

iris.isna().sum(axis=1).head()

0 0 1 0 2 0 3 1 4 0 dtype: int64

Use iris.isna().all().sum(axis=1) to count rows with missing data across all columns

iris.isna().all(axis=1).sum()

0

Missing data¶

Use iris.dropna() to drop rows with missing data (by default a row is dropped if contains 'any' missing data)

iris.dropna().shape

(116, 5)

Use iris.dropna(how='all') to drop rows with missing data in all columns

iris.dropna(how='all').shape

(150, 5)

Use iris.dropna(subset=[column names]) to drop rows with missing data in specific columns (list of column names)

iris.dropna(subset=['sepal length (cm)', 'sepal width (cm)']).shape

(137, 5)

Filling missing data¶

Important note: whether or not to fill missing data and how depends on the dataset and the specific research question

Replace missing data with constant value (for example 0, for illustration only)

iris.fillna(0).head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | SETOSA |

| 3 | 4.6 | 0.0 | 1.5 | 0.2 | SETOSA |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

Filling missing data¶

Replace missing data with constant value per column (for example median, for illustration only)

iris.fillna(iris.median()).head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | SETOSA |

| 3 | 4.6 | 3.0 | 1.5 | 0.2 | SETOSA |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

Filling missing data¶

Replace missing data by linear interpolation (for illustration only)

iris.interpolate(method='linear').head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | SETOSA |

| 3 | 4.6 | 3.4 | 1.5 | 0.2 | SETOSA |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

Harmonize entries¶

See existing spellings

iris.species.value_counts()

virginica 41 versicolor 39 setosa 34 SETOSA 11 VERSICOLOR 8 VIRGINICA 8 Name: species, dtype: int64

Harmonize entries¶

Use .map() with a dictionary that maps current to desidered values

iris.species.map({

'virginica': 'Virginica',

'versicolor': 'Versicolor',

'setosa': 'Setosa',

'VIRGINICA': 'Virginica',

'VERSICOLOR': 'Versicolor',

'SETOSA': 'Setosa',

}).value_counts()

Virginica 49 Versicolor 47 Setosa 45 Name: species, dtype: int64

Harmonize entries¶

Use .replace() with a dictionary that maps current to desidered values. Unlisted values remain unchanged

iris.species.replace({

'VIRGINICA': 'virginica',

'VERSICOLOR': 'versicolor',

'SETOSA': 'setosa',

}).value_counts()

virginica 49 versicolor 47 setosa 45 Name: species, dtype: int64

Harmonize entries¶

Use .replace() with a dictionary that maps current to desidered values using regular expression (https://en.wikipedia.org/wiki/Regular_expression)

iris.species.replace({

'VIRGINICA|virginica': 'Virginica', # A or B

'(?i:VERSICOLOR)': 'Versicolor', # Case insensitive groups

'[Ss].*': 'Setosa', # String starting with S or S

}, regex=True).value_counts()

Virginica 49 VerSetosa 47 Setosa 45 Name: species, dtype: int64

Harmonize entries¶

Use your own function for custom logic

def harmonize_species(old_name):

if old_name == 'SETOSA':

return 'setosa'

elif old_name == 'VIRGINICA':

return 'virginica'

elif old_name == 'VERSICOLOR':

return 'versicolor'

else:

return old_name

iris.species.apply(harmonize_species).value_counts()

virginica 49 versicolor 47 setosa 45 Name: species, dtype: int64

Harmonize entries¶

Use pandas built-in string methods for common manipulations (https://pandas.pydata.org/pandas-docs/stable/user_guide/text.html#method-summary)

iris.species.str.title().value_counts()

Virginica 49 Versicolor 47 Setosa 45 Name: species, dtype: int64

Removing data¶

Use iris.drop(index=index_to_drop) to drop rows with specific index/indices

iris.drop(index=range(0,5)).head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 5 | 5.4 | 3.9 | 1.7 | 0.4 | SETOSA |

| 6 | 4.6 | 3.4 | 1.4 | 0.3 | NaN |

| 7 | 5.0 | 3.4 | 1.5 | 0.2 | setosa |

| 8 | 4.4 | 2.9 | 1.4 | 0.2 | setosa |

| 9 | 4.9 | 3.1 | 1.5 | 0.1 | setosa |

Removing data¶

Use iris.drop(columns=columns_to_drop) to drop columns with specific column(s)

iris.drop(columns=['sepal length (cm)', 'sepal width (cm)']).head()

| petal length (cm) | petal width (cm) | species | |

|---|---|---|---|

| 0 | 1.4 | 0.2 | setosa |

| 1 | 1.4 | 0.2 | setosa |

| 2 | 1.3 | 0.2 | SETOSA |

| 3 | 1.5 | 0.2 | SETOSA |

| 4 | 1.4 | 0.2 | setosa |

Removing data¶

Use .drop_duplicated() to drop rows with duplicated values

iris.drop_duplicates().shape

(150, 5)

Discretize numeric data¶

Use pandas.qcut() to discretize in quantiles

pandas.qcut(iris['sepal length (cm)'], q=3).value_counts(sort=False)

(4.2989999999999995, 5.4] 51 (5.4, 6.3] 53 (6.3, 7.9] 40 Name: sepal length (cm), dtype: int64

Discretize numeric data¶

Use pandas.cut() to discretize in n equally wide bins

pandas.cut(iris['sepal length (cm)'], bins=3).value_counts(sort=False)

(4.296, 5.5] 58 (5.5, 6.7] 67 (6.7, 7.9] 19 Name: sepal length (cm), dtype: int64

Discretize numeric data¶

Use pandas.cut() to discretize with a custom bin edges (for example, [0, mean, Inf))

pandas.cut(iris['sepal length (cm)'], bins=[0, iris['sepal length (cm)'].mean(), numpy.Inf]).value_counts(sort=False)

(0.0, 5.833] 77 (5.833, inf] 67 Name: sepal length (cm), dtype: int64

Unit conversion¶

Use standard arithmetic operations to scale values in columns (for example, convert measurements to meters)

iris['sepal length (cm)'].head() / 100

0 0.051 1 0.049 2 0.047 3 0.046 4 0.050 Name: sepal length (cm), dtype: float64

Synthetizing new columns by combining existing columns¶

Use standard arithmetic operations to construct a new column (for example, compute ratio length to width for sepal)

(iris['sepal length (cm)'] / iris['sepal width (cm)']).head()

0 1.457143 1 1.633333 2 1.468750 3 NaN 4 1.388889 dtype: float64

Putting all together¶

Drop rows with missing values and save result into a new dataframe called iris_clean

iris_clean = iris.dropna().copy()

Harmonize species spelling and save results back in the species column

iris_clean['species'] = iris_clean['species'].str.title()

Synthetize new column with ratio between length and width for sepals and petals

iris_clean['sepal_length_over_width_ratio'] = (iris_clean['sepal length (cm)'] / iris_clean['sepal width (cm)'])

iris_clean['petal_length_over_width_ratio'] = (iris_clean['petal length (cm)'] / iris_clean['petal width (cm)'])

Drop redundant columns

iris_clean = iris_clean.drop(columns=iris.columns[0:4])

Resulting dataframe¶

iris_clean.head()

| species | sepal_length_over_width_ratio | petal_length_over_width_ratio | |

|---|---|---|---|

| 0 | Setosa | 1.457143 | 7.00 |

| 1 | Setosa | 1.633333 | 7.00 |

| 2 | Setosa | 1.468750 | 6.50 |

| 4 | Setosa | 1.388889 | 7.00 |

| 5 | Setosa | 1.384615 | 4.25 |

Practise¶

- Go to http://tiny.cc/4c33gz

- Click on "05_pandas_data_cleaning_and_preprocessing_practise.py"

- Play around

Continue in 15 min.

Descriptive statistics with pandas - section 6¶

Descriptive statistics for the iris dataset¶

Import libraries and dataset

import os

import pandas

import numpy

iris = pandas.read_csv(os.path.join('data', 'iris.csv'))

iris.head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

Descriptive statistics for the iris dataset¶

Use iris.mean() to get mean across all rows for each numeric column

iris.mean()

sepal length (cm) 5.843333 sepal width (cm) 3.057333 petal length (cm) 3.758000 petal width (cm) 1.199333 dtype: float64

Use iris.mean(axis=1) to get mean across all numeric columns for each row

iris.mean(axis=1)

0 2.550

1 2.375

2 2.350

3 2.350

4 2.550

...

145 4.300

146 3.925

147 4.175

148 4.325

149 3.950

Length: 150, dtype: float64

Descriptive statistics for the iris dataset¶

Use iris.median() to get median across all rows for each numeric column

iris.median()

sepal length (cm) 5.80 sepal width (cm) 3.00 petal length (cm) 4.35 petal width (cm) 1.30 dtype: float64

Descriptive statistics for the iris dataset¶

Similar common aggregation functions are available:

- `.sum()`

- `.quantile()`

- `.count()`

- `.min()`

- `.max()`

- `.std()`

- `.abs()`

- `.corr()`

- ....

Check documentation at https://pandas.pydata.org/pandas-docs/stable/reference/frame.html#computations-descriptive-stats

Can be applied to full dataframe or to selected column(s)

iris['sepal length (cm)'].quantile(0.5)

5.8

iris[['sepal length (cm)', 'sepal width (cm)']].quantile(0.5)

sepal length (cm) 5.8 sepal width (cm) 3.0 Name: 0.5, dtype: float64

Descriptive statistics for the iris dataset¶

# Compute count

iris['sepal length (cm)'].count()

150

# Compute min

iris['sepal length (cm)'].min()

4.3

# Compute max

iris['sepal length (cm)'].max()

7.9

# Compute standard deviation

iris['sepal length (cm)'].std()

0.828066127977863

Descriptive statistics for the iris dataset¶

# Compute abs

iris['sepal length (cm)'].abs()

0 5.1

1 4.9

2 4.7

3 4.6

4 5.0

...

145 6.7

146 6.3

147 6.5

148 6.2

149 5.9

Name: sepal length (cm), Length: 150, dtype: float64

Descriptive statistics for the iris dataset¶

# Compute correlation (by deafult uses `method='pearson'`)

iris.corr()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| sepal length (cm) | 1.000000 | -0.117570 | 0.871754 | 0.817941 |

| sepal width (cm) | -0.117570 | 1.000000 | -0.428440 | -0.366126 |

| petal length (cm) | 0.871754 | -0.428440 | 1.000000 | 0.962865 |

| petal width (cm) | 0.817941 | -0.366126 | 0.962865 | 1.000000 |

# Compute Spearman correlation

iris.corr(method='spearman')

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| sepal length (cm) | 1.000000 | -0.166778 | 0.881898 | 0.834289 |

| sepal width (cm) | -0.166778 | 1.000000 | -0.309635 | -0.289032 |

| petal length (cm) | 0.881898 | -0.309635 | 1.000000 | 0.937667 |

| petal width (cm) | 0.834289 | -0.289032 | 0.937667 | 1.000000 |

Descriptive statistics for the iris dataset¶

Other useful functions:

- `.nsmallest`

- `.nlargest`

- `.idxmin()`

- `.idxmax()`.nlargest()and.nsmallest()are applied to a specific column.idxmax()and.idxmin()can be applied to either a full dataframe or to selected column(s)

Descriptive statistics for the iris dataset¶

Use iris.nsmallest() to identify rows with highest measurement for a column

iris.nsmallest(n=3, columns='petal length (cm)')

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 22 | 4.6 | 3.6 | 1.0 | 0.2 | setosa |

| 13 | 4.3 | 3.0 | 1.1 | 0.1 | setosa |

| 14 | 5.8 | 4.0 | 1.2 | 0.2 | setosa |

Verify results

iris['petal length (cm)'].min()

1.0

iris['petal length (cm)'].idxmin()

22

iris['petal length (cm)'].sort_values().head(3)

22 1.0 13 1.1 14 1.2 Name: petal length (cm), dtype: float64

Descriptive statistics for the iris dataset¶

Use iris.nlargest() to identify rows with highest measurement for a column

iris.nlargest(n=3, columns='petal length (cm)')

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 118 | 7.7 | 2.6 | 6.9 | 2.3 | virginica |

| 117 | 7.7 | 3.8 | 6.7 | 2.2 | virginica |

| 122 | 7.7 | 2.8 | 6.7 | 2.0 | virginica |

Verify results

iris['petal length (cm)'].max()

6.9

iris['petal length (cm)'].idxmax()

118

iris['petal length (cm)'].sort_values(ascending=False).head(3)

118 6.9 122 6.7 117 6.7 Name: petal length (cm), dtype: float64

Descriptive statistics for the iris dataset¶

Use iris.apply() to apply a custom function. Note: subset to numerical columns

iris.iloc[:, 0:4].head()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 |

iris.iloc[:, 0:4].apply(numpy.mean)

sepal length (cm) 5.843333 sepal width (cm) 3.057333 petal length (cm) 3.758000 petal width (cm) 1.199333 dtype: float64

Descriptive statistics for the iris dataset¶

Use iris.aggregate() to apply one or more custom functions

iris.iloc[:, 0:4].aggregate(lambda x: numpy.mean(x))

sepal length (cm) 5.843333 sepal width (cm) 3.057333 petal length (cm) 3.758000 petal width (cm) 1.199333 dtype: float64

iris.iloc[:, 0:4].aggregate(lambda x: [numpy.mean(x), numpy.std(x)])

sepal length (cm) [5.843333333333334, 0.8253012917851409] sepal width (cm) [3.0573333333333337, 0.4344109677354946] petal length (cm) [3.7580000000000005, 1.759404065775303] petal width (cm) [1.1993333333333336, 0.7596926279021594] dtype: object

Descriptive statistics for the iris dataset¶

Use iris.aggregate() to apply one or more custom functions

iris.iloc[:, 0:4].aggregate(['sum', 'mean', numpy.median])

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| sum | 876.500000 | 458.600000 | 563.700 | 179.900000 |

| mean | 5.843333 | 3.057333 | 3.758 | 1.199333 |

| median | 5.800000 | 3.000000 | 4.350 | 1.300000 |

Descriptive statistics for the iris dataset¶

Use iris.aggregate() to compute range (max - min)

iris.iloc[:, 0:4].aggregate(lambda x: [numpy.max(x) - numpy.min(x)])

sepal length (cm) [3.6000000000000005] sepal width (cm) [2.4000000000000004] petal length (cm) [5.9] petal width (cm) [2.4] dtype: object

Use iris.aggregate() to compute interquantile range (75th percentile - 25th percentile)

iris.iloc[:, 0:4].aggregate(lambda x: [numpy.quantile(x, 0.75) - numpy.quantile(x, 0.25)])

sepal length (cm) [1.3000000000000007] sepal width (cm) [0.5] petal length (cm) [3.4999999999999996] petal width (cm) [1.5] dtype: object

Descriptive statistics for the iris dataset¶

iris.iloc[:, 0:4].aggregate(lambda x: numpy.quantile(x, [0.025, 0.25, 0.5, 0.75, 0.975]))

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| 0 | 4.4725 | 2.2725 | 1.2725 | 0.1 |

| 1 | 5.1000 | 2.8000 | 1.6000 | 0.3 |

| 2 | 5.8000 | 3.0000 | 4.3500 | 1.3 |

| 3 | 6.4000 | 3.3000 | 5.1000 | 1.8 |

| 4 | 7.7000 | 3.9275 | 6.4550 | 2.4 |

Descriptive statistics for the iris dataset¶

Use iris.describe() to get summary statistics for the measurements

iris.describe()

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |

|---|---|---|---|---|

| count | 150.000000 | 150.000000 | 150.000000 | 150.000000 |

| mean | 5.843333 | 3.057333 | 3.758000 | 1.199333 |

| std | 0.828066 | 0.435866 | 1.765298 | 0.762238 |

| min | 4.300000 | 2.000000 | 1.000000 | 0.100000 |

| 25% | 5.100000 | 2.800000 | 1.600000 | 0.300000 |

| 50% | 5.800000 | 3.000000 | 4.350000 | 1.300000 |

| 75% | 6.400000 | 3.300000 | 5.100000 | 1.800000 |

| max | 7.900000 | 4.400000 | 6.900000 | 2.500000 |

Descriptive statistics for the iris dataset¶

Use iris.describe(include='all') to get summary statistics for all the columns

iris.describe(include='all')

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| count | 150.000000 | 150.000000 | 150.000000 | 150.000000 | 150 |

| unique | NaN | NaN | NaN | NaN | 3 |

| top | NaN | NaN | NaN | NaN | versicolor |

| freq | NaN | NaN | NaN | NaN | 50 |

| mean | 5.843333 | 3.057333 | 3.758000 | 1.199333 | NaN |

| std | 0.828066 | 0.435866 | 1.765298 | 0.762238 | NaN |

| min | 4.300000 | 2.000000 | 1.000000 | 0.100000 | NaN |

| 25% | 5.100000 | 2.800000 | 1.600000 | 0.300000 | NaN |

| 50% | 5.800000 | 3.000000 | 4.350000 | 1.300000 | NaN |

| 75% | 6.400000 | 3.300000 | 5.100000 | 1.800000 | NaN |

| max | 7.900000 | 4.400000 | 6.900000 | 2.500000 | NaN |

Descriptive statistics for the iris dataset¶

Use iris.value_counts() to get a breakdown of each species in the dataset

iris.species.value_counts()

versicolor 50 virginica 50 setosa 50 Name: species, dtype: int64

Show results as fraction

iris.species.value_counts(normalize=True)

versicolor 0.333333 virginica 0.333333 setosa 0.333333 Name: species, dtype: float64

Descriptive statistics for the iris dataset by flower species¶

iris.groupby('species').describe().style.apply(lambda x: ['background: lightblue' if x.name == '50%' else '' for i in x], axis=1)

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | |||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | mean | std | min | 25% | 50% | 75% | max | count | mean | std | min | 25% | 50% | 75% | max | count | mean | std | min | 25% | 50% | 75% | max | count | mean | std | min | 25% | 50% | 75% | max | |

| species | ||||||||||||||||||||||||||||||||

| setosa | 50 | 5.006 | 0.35249 | 4.3 | 4.8 | 5 | 5.2 | 5.8 | 50 | 3.428 | 0.379064 | 2.3 | 3.2 | 3.4 | 3.675 | 4.4 | 50 | 1.462 | 0.173664 | 1 | 1.4 | 1.5 | 1.575 | 1.9 | 50 | 0.246 | 0.105386 | 0.1 | 0.2 | 0.2 | 0.3 | 0.6 |

| versicolor | 50 | 5.936 | 0.516171 | 4.9 | 5.6 | 5.9 | 6.3 | 7 | 50 | 2.77 | 0.313798 | 2 | 2.525 | 2.8 | 3 | 3.4 | 50 | 4.26 | 0.469911 | 3 | 4 | 4.35 | 4.6 | 5.1 | 50 | 1.326 | 0.197753 | 1 | 1.2 | 1.3 | 1.5 | 1.8 |

| virginica | 50 | 6.588 | 0.63588 | 4.9 | 6.225 | 6.5 | 6.9 | 7.9 | 50 | 2.974 | 0.322497 | 2.2 | 2.8 | 3 | 3.175 | 3.8 | 50 | 5.552 | 0.551895 | 4.5 | 5.1 | 5.55 | 5.875 | 6.9 | 50 | 2.026 | 0.27465 | 1.4 | 1.8 | 2 | 2.3 | 2.5 |

Stats beyond pandas built-in functions¶

There are a few main general pourpose libraries for stats in python:

- **statsmodels**: https://www.statsmodels.org/stable/index.html

- **scipy**: https://www.scipy.org/

-...

Simple example using statsmodels¶

import statsmodels.formula.api

import seaborn

seaborn.swarmplot(x='species', y='petal length (cm)', data=iris);

Simple example using statsmodels¶

statsmodels.formula.api.ols('Q("petal length (cm)") ~ species', data=iris).fit().summary()

| Dep. Variable: | Q("petal length (cm)") | R-squared: | 0.941 |

|---|---|---|---|

| Model: | OLS | Adj. R-squared: | 0.941 |

| Method: | Least Squares | F-statistic: | 1180. |

| Date: | Sat, 25 Jul 2020 | Prob (F-statistic): | 2.86e-91 |

| Time: | 18:20:13 | Log-Likelihood: | -84.847 |

| No. Observations: | 150 | AIC: | 175.7 |

| Df Residuals: | 147 | BIC: | 184.7 |

| Df Model: | 2 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| Intercept | 1.4620 | 0.061 | 24.023 | 0.000 | 1.342 | 1.582 |

| species[T.versicolor] | 2.7980 | 0.086 | 32.510 | 0.000 | 2.628 | 2.968 |

| species[T.virginica] | 4.0900 | 0.086 | 47.521 | 0.000 | 3.920 | 4.260 |

| Omnibus: | 4.394 | Durbin-Watson: | 1.997 |

|---|---|---|---|

| Prob(Omnibus): | 0.111 | Jarque-Bera (JB): | 5.366 |

| Skew: | 0.122 | Prob(JB): | 0.0683 |

| Kurtosis: | 3.894 | Cond. No. | 3.73 |

Warnings:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

Using R packages from python with rpy2¶

Some statistical functions may be available in R, but not in python. For this purpose you can call R packages from python using rpy2 (https://rpy2.bitbucket.io/).

# Import rpy2 modules

import rpy2.robjects

import rpy2.robjects.packages

import rpy2.robjects.pandas2ri

# Save the R 'stats' package as a python variable

stats = rpy2.robjects.packages.importr('stats')

# Convert pandas dataframes to R objects

with rpy2.robjects.conversion.localconverter(

rpy2.robjects.default_converter + rpy2.robjects.pandas2ri.converter):

# Run model in R

model = stats.lm('petal_length ~ species',

data=iris.rename(columns={'petal length (cm)': 'petal_length'}))

summary = stats.summary_lm(model)

Using R packages from python with rpy2¶

# Extract the coefficients

coef = stats.coef(summary)

# Convert coefficients to python dataframe

with rpy2.robjects.conversion.localconverter(

rpy2.robjects.default_converter + rpy2.robjects.pandas2ri.converter):

coef = pandas.DataFrame(rpy2.robjects.conversion.rpy2py(coef), index=coef.rownames, columns=coef.colnames)

coef

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | 1.462 | 0.060858 | 24.022945 | 9.303052e-53 |

| speciesversicolor | 2.798 | 0.086067 | 32.509597 | 5.254587e-69 |

| speciesvirginica | 4.090 | 0.086067 | 47.521176 | 4.106139e-91 |

Practise¶

- Go to http://tiny.cc/4c33gz

- Click on "05_pandas_data_cleaning_and_preprocessing_practise.py"

- Play around

Continue in 10 min.

Data plotting - section 07¶

Data plotting¶

- Exploratory data analysis (EDA) is a crucial part of any research project

- quality control (missing data, outlier detection, ...)

- descriptive statistics

- visualization

- insights to guide downstream analysis

Image from http://www.codeheroku.com/post.html?name=Introduction%20to%20Exploratory%20Data%20Analysis%20(EDA)

Image from http://www.codeheroku.com/post.html?name=Introduction%20to%20Exploratory%20Data%20Analysis%20(EDA)

Beyond built-in plotting with pandas¶

(A few) of python's plotting libraries:

- matplotlib: https://matplotlib.org/

- seaborn: https://seaborn.pydata.org/

- plotnine (ggplot-like): https://plotnine.readthedocs.io/en/stable/

- plotly: https://plot.ly/

- bokeh: https://docs.bokeh.org/en/latest/index.html

- ...

Matplotlib¶

Matplotlib is a Python 2D plotting library which produces publication quality figures in a variety of hardcopy formats and interactive environments across platforms.

Matplotlib tries to make easy things easy and hard things possible. You can generate plots, histograms, power spectra, bar charts, errorcharts, scatterplots, etc., with just a few lines of code. For examples, see the sample plots and thumbnail gallery.

For the power user, you have full control of line styles, font properties, axes properties, etc, via an object oriented interface or via a set of functions familiar to MATLAB users.

Seaborn¶

Seaborn is a Python visualization library based on matplotlib. It provides a high-level interface for drawing attractive statistical graphics. (https://seaborn.pydata.org/index.html)

- Examples gallery: https://seaborn.pydata.org/examples/index.html

Let's get plotting the iris dataset¶

import os

import numpy

import pandas

import matplotlib.pyplot

import seaborn

iris = pandas.read_csv(os.path.join('data', 'iris.csv'))

Univariate analysis - swarm-plot¶

seaborn.swarmplot(y='sepal length (cm)', color='k', data=iris);

Grouped swarm-plot¶

seaborn.swarmplot(x='species', y='sepal length (cm)', data=iris.reset_index());

Grouped box-plot¶

seaborn.boxplot(x='species', y='sepal length (cm)', data=iris.reset_index());

Grouped violin-plot¶

seaborn.violinplot(x='species', y='sepal length (cm)', data=iris.reset_index());

Combining multiple univariate plots

seaborn.violinplot(x='species', y='sepal length (cm)', data=iris.reset_index());

seaborn.swarmplot(x='species', y='sepal length (cm)', color='w', data=iris.reset_index());

Compose a figure with multiple sub-plots¶

fig, axes = matplotlib.pyplot.subplots(nrows=1, ncols=4, figsize=(24, 8))

# Dot plot with no grouping variable

seaborn.swarmplot(y='sepal length (cm)', color='k', data=iris, ax=axes[0])

# Dot plot grouped by species

seaborn.swarmplot(x='species', y='sepal length (cm)', dodge=True, data=iris.reset_index(), ax=axes[1])

# Boxplot grouped by species

seaborn.boxplot(x='species', y='sepal length (cm)', dodge=True, data=iris.reset_index(), ax=axes[2])

# Violin plot grouped by species

seaborn.violinplot(x='species', y='sepal length (cm)', dodge=True, data=iris.reset_index(), ax=axes[3]);

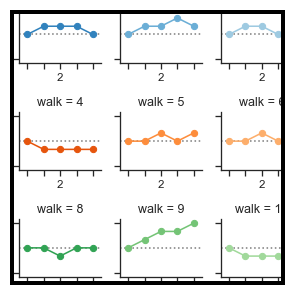

Compose a figure with a grid of identical sub-plots (FacetGrid)¶

Figure-level interface for drawing categorical plots onto a FacetGrid.

Figure-level interface for drawing relational plots onto a FacetGrid.

x, y, hue : names of variables in data Inputs for plotting long-form data. See examples for interpretation.

Reshape a dataframe from a wide-format to a long-format¶

Wide-format:

iris.loc[[6, 92, 140]]

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | species | |

|---|---|---|---|---|---|

| 6 | 4.6 | 3.4 | 1.4 | 0.3 | setosa |

| 92 | 5.8 | 2.6 | 4.0 | 1.2 | versicolor |

| 140 | 6.7 | 3.1 | 5.6 | 2.4 | virginica |

Reshape a dataframe from a wide-format to a long-format¶

Let's stack the table and add a grouping variable to convert to a tall format

iris_long = iris.set_index('species', append=True).stack().to_frame('value')

iris_long.index.names = ['id', 'species', 'feature']

iris_long.loc[[6, 92, 140]]

| value | |||

|---|---|---|---|

| id | species | feature | |

| 6 | setosa | sepal length (cm) | 4.6 |

| sepal width (cm) | 3.4 | ||

| petal length (cm) | 1.4 | ||

| petal width (cm) | 0.3 | ||

| 92 | versicolor | sepal length (cm) | 5.8 |

| sepal width (cm) | 2.6 | ||

| petal length (cm) | 4.0 | ||

| petal width (cm) | 1.2 | ||

| 140 | virginica | sepal length (cm) | 6.7 |

| sepal width (cm) | 3.1 | ||

| petal length (cm) | 5.6 | ||

| petal width (cm) | 2.4 |

Draw a grid of sub-plots where each categorical plot includes a single feature¶

seaborn.catplot(x='species', y='value', col='feature', sharey=False, data=iris_long.reset_index());

Bivariate analysis - scatter-plot¶

seaborn.scatterplot(x='sepal length (cm)', y='petal width (cm)', hue='species', data=iris);

Bivariate analysis - scatterplot + histograms (joint-plot)¶

seaborn.jointplot(x='sepal length (cm)', y='petal width (cm)', kind='reg', data=iris);

Draw a grid of sub-plots where each relational plot includes a single feature¶

seaborn.relplot(x='sepal length (cm)', y='petal width (cm)', col='species', data=iris.reset_index());

Visualize relationship and distribution between feature pairs¶

seaborn.pairplot(iris, hue='species');

Plot heatmap to visualize correlation among features¶

Practise¶

- Go to http://tiny.cc/4c33gz

- Click on "07_data_plotting_practise.py"

- Play around

Continue in 10 min.

Full Example - Dogs and (dog bites) in New York - section 8¶

Dogs in New York¶

Data from New York Open Data (https://opendata.cityofnewyork.us/)

- Dogs licensing dataset: https://data.cityofnewyork.us/Health/NYC-Dog-Licensing-Dataset/nu7n-tubp

- Dog bites dataset: https://data.cityofnewyork.us/Health/DOHMH-Dog-Bite-Data/rsgh-akpg

Dogs in New York - data import¶

Import libraries and dataset

import os

import pandas

import matplotlib.pyplot

dogs = pandas.read_csv(os.path.join('data', 'NYC_Dog_Licensing_Dataset.csv'),

index_col=0,

na_values=['Unknown', 'UNKNOWN', 'NAME NOT PROVIDED'])

dogs.head()

| AnimalName | AnimalGender | AnimalBirthMonth | BreedName | Borough | ZipCode | LicenseIssuedDate | LicenseExpiredDate | Extract Year | |

|---|---|---|---|---|---|---|---|---|---|

| RowNumber | |||||||||

| 1 | PAIGE | F | 2014 | American Pit Bull Mix / Pit Bull Mix | NaN | 10035 | 09/12/2014 | 09/12/2017 | 2016 |

| 2 | YOGI | M | 2010 | Boxer | NaN | 10465 | 09/12/2014 | 10/02/2017 | 2016 |

| 3 | ALI | M | 2014 | Basenji | NaN | 10013 | 09/12/2014 | 09/12/2019 | 2016 |

| 4 | QUEEN | F | 2013 | Akita Crossbreed | NaN | 10013 | 09/12/2014 | 09/12/2017 | 2016 |

| 5 | LOLA | F | 2009 | Maltese | NaN | 10028 | 09/12/2014 | 10/09/2017 | 2016 |

Dogs in New York - data cleaning¶

dogs.rename(columns={

'BreedName': 'Breed',

'AnimalGender': 'Gender'},

inplace=True)

Dogs in New York - data aggregation¶

Count number of dogs per breed and gender

dogs_by_breed_and_gender = dogs.groupby(['Breed', 'Gender']).size()

dogs_by_breed_and_gender.head()

Breed Gender

Affenpinscher F 43

M 48

Afghan Hound F 26

M 33

Afghan Hound Crossbreed F 6

dtype: int64

Dogs in New York - data reshaping¶

Use unstack to reshape the table from tall to wide and put female- and male- counts in separate columns.

dogs_by_breed = dogs_by_breed_and_gender.unstack('Gender')

dogs_by_breed.head()

| Gender | F | M |

|---|---|---|

| Breed | ||

| Affenpinscher | 43.0 | 48.0 |

| Afghan Hound | 26.0 | 33.0 |

| Afghan Hound Crossbreed | 6.0 | 1.0 |

| Airedale Terrier | 74.0 | 81.0 |

| Akita | 124.0 | 234.0 |

Dogs in New York - data processing¶

Let's add another column for total number of dogs per breed

dogs_by_breed['Total'] = dogs_by_breed.sum(axis=1)

dogs_by_breed.head()

| Gender | F | M | Total |

|---|---|---|---|

| Breed | |||

| Affenpinscher | 43.0 | 48.0 | 91.0 |

| Afghan Hound | 26.0 | 33.0 | 59.0 |

| Afghan Hound Crossbreed | 6.0 | 1.0 | 7.0 |

| Airedale Terrier | 74.0 | 81.0 | 155.0 |

| Akita | 124.0 | 234.0 | 358.0 |

Dogs in New York - data processing¶

Let's focus on the most common breeds (top 50)

dogs_by_breed = dogs_by_breed.sort_values(by='Total', ascending=False).head(50)

dogs_by_breed.head()

| Gender | F | M | Total |

|---|---|---|---|

| Breed | |||

| Yorkshire Terrier | 9331.0 | 12588.0 | 21919.0 |

| Shih Tzu | 8414.0 | 11214.0 | 19628.0 |

| Chihuahua | 7483.0 | 8164.0 | 15647.0 |

| Maltese | 4802.0 | 6586.0 | 11388.0 |

| Labrador Retriever | 5548.0 | 5779.0 | 11327.0 |

Dogs in New York - data plotting¶

dogs_by_breed[['F', 'M']].plot.bar(stacked=True, color=['#fa9fb5', '#6baed6'], figsize=(16,6));

matplotlib.pyplot.gca().set_ylabel('Number of dogs');

Dog bites in New York¶

Joining with another dataset

dog_bites = pandas.read_csv(os.path.join('data', 'DOHMH_Dog_Bite_Data.csv'),

index_col=0,

na_values=['U'])

dog_bites.head()

| DateOfBite | Species | Breed | Age | Gender | SpayNeuter | Borough | ZipCode | |

|---|---|---|---|---|---|---|---|---|

| UniqueID | ||||||||

| 1 | January 02 2015 | DOG | Poodle, Standard | 3 | M | True | Brooklyn | 11238 |

| 2 | January 02 2015 | DOG | HUSKY | NaN | NaN | False | Brooklyn | 11249 |

| 3 | January 02 2015 | DOG | NaN | NaN | NaN | False | Brooklyn | NaN |

| 4 | January 01 2015 | DOG | American Pit Bull Terrier/Pit Bull | 6 | M | False | Brooklyn | 11221 |

| 5 | January 03 2015 | DOG | American Pit Bull Terrier/Pit Bull | 1 | M | False | Brooklyn | 11207 |

Dog bites in New York - data aggregation¶

Let's group by breed and gender like the previous data-set

dog_bites_by_breed_and_gender = dog_bites.groupby(['Breed', 'Gender']).size()

dog_bites_by_breed_and_gender.head()

Breed Gender /SHIH TZU MIX M 1 2 DOGS: TERR X & DOBERMAN F 1 AFRICAN BOERBOEL M 1 AIREDALE TERRIER M 1 AKITA/CHOW CHOW M 1 dtype: int64

Combine dogs info with dig bites info in New York - data join¶

Now we can join the two data-sets together, to get both number of dogs and number of bites per breed and gender

bites_per_dog = dogs_by_breed_and_gender.to_frame('dogs').join(

dog_bites_by_breed_and_gender.to_frame('bites'), how='outer').\

sort_values(by='dogs', ascending=False)

bites_per_dog.head()

| dogs | bites | ||

|---|---|---|---|

| Breed | Gender | ||

| Yorkshire Terrier | M | 12588.0 | 110.0 |

| Shih Tzu | M | 11214.0 | 203.0 |

| Yorkshire Terrier | F | 9331.0 | 44.0 |

| Shih Tzu | F | 8414.0 | 66.0 |

| Chihuahua | M | 8164.0 | 144.0 |

Dogs in New York - data cleaning¶

For some breeds we may not have information about number of dogs or bites.

- If there are no known recorded bites for a breed, we replace it with 0.

- There may also be cases where we have a recorded bite for a breed where we have no known dog count. This is probably due to breed names not being spelled correctly. We drop these rows.bites_per_dog[bites_per_dog.isna().any(axis=1)].sample(5)

| dogs | bites | ||

|---|---|---|---|

| Breed | Gender | ||

| BOX TERR X | F | NaN | 1.0 |

| TERRIER X | M | NaN | 6.0 |

| Schnauzer, Miniature Crossbreed | F | 86.0 | NaN |

| MUTT | F | NaN | 7.0 |

| French Spaniel | F | 7.0 | NaN |

Dogs in New York - data cleaning¶

bites_per_dog.dropna(subset=['dogs'], inplace=True)

bites_per_dog.fillna(0, inplace=True)

bites_per_dog.head()

| dogs | bites | ||

|---|---|---|---|

| Breed | Gender | ||

| Yorkshire Terrier | M | 12588.0 | 110.0 |

| Shih Tzu | M | 11214.0 | 203.0 |

| Yorkshire Terrier | F | 9331.0 | 44.0 |

| Shih Tzu | F | 8414.0 | 66.0 |

| Chihuahua | M | 8164.0 | 144.0 |

Bites per dog breed in New York¶

Now we can caulculate the number of bites per dog for each breed/gender.

bites_per_dog['bites_per_dog'] = bites_per_dog.bites / bites_per_dog.dogs

bites_per_dog.head()

| dogs | bites | bites_per_dog | ||

|---|---|---|---|---|

| Breed | Gender | |||

| Yorkshire Terrier | M | 12588.0 | 110.0 | 0.008738 |

| Shih Tzu | M | 11214.0 | 203.0 | 0.018102 |

| Yorkshire Terrier | F | 9331.0 | 44.0 | 0.004715 |

| Shih Tzu | F | 8414.0 | 66.0 | 0.007844 |

| Chihuahua | M | 8164.0 | 144.0 | 0.017638 |

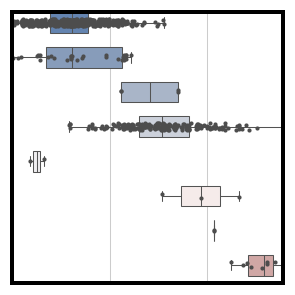

Bites per dog breed in New York¶

Lets subset to breeds with many (>5000) data-points

bites_per_dog = bites_per_dog[bites_per_dog.dogs > 5000]

bites_per_dog.head()

| dogs | bites | bites_per_dog | ||

|---|---|---|---|---|

| Breed | Gender | |||

| Yorkshire Terrier | M | 12588.0 | 110.0 | 0.008738 |

| Shih Tzu | M | 11214.0 | 203.0 | 0.018102 |

| Yorkshire Terrier | F | 9331.0 | 44.0 | 0.004715 |

| Shih Tzu | F | 8414.0 | 66.0 | 0.007844 |

| Chihuahua | M | 8164.0 | 144.0 | 0.017638 |

Bites per dog breed in New York - safest and most dangerous dog breeds are revelead¶

ax = bites_per_dog.bites_per_dog.sort_values().plot.bar(figsize=(16,3.5));

ax.set_ylabel('Bites per dog');

ax.set_xticklabels(ax.get_xticklabels(), rotation=20);

Image from

Image from